“Read my lips.” That’s a lot easier said than done. It’s a difficult skill to master, in part because only about 30% of speech is considered “visible”. Even the best lip readers understand only somewhere between 40% and 60% of what is said, and even those figures are open to question.

Put another way, even skilled lip readers get it wrong about half the time. That point is illustrated by an episode of “Seinfeld” in which Jerry dates a deaf woman who relies on lip reading. He asks her out and offers to pick her up: “How about six?” She looks angry and offended, then leaves. Jerry later discovers that she thought he had said “sex” instead of “six”.

Now comes news that the University of Oxford, in partnership with Google’s DeepMind artificial intelligence lab, has come up with a system that may help clear up the confusion.

Their AI system was taught to lip read using some 5,000 hours of BBC television clips. As it scanned speakers’ lips, the system got better and better at reading them. In the end, the AI correctly “read” about 47% of what was being said without making a mistake. By comparison, human lip readers managed to get only about 12% right.

You can try it yourself. Here is one of the silent BBC clips.

The AI scanned the area inside the red square and produced these captions.

It’s a breakthrough that opens up some intriguing possibilities, according to another team of researchers at Oxford who are working on a similar system called LipNet.

‘Machine lip readers have enormous practical potential, with applications in improved hearing aids, silent dictation in public spaces, covert conversations, speech recognition in noisy environments, biometric identification, and silent-movie processing.’ (LipNet research report)

Reading between the lines of that statement, you can imagine a few scenarios. One day you may be able to look at your phone and mouth a command to Siri without making a sound. Or you might point your phone’s camera at someone in a noisy room and have what they are saying relayed directly to your hearing aids via Bluetooth.

More ominously, it may also enable secret surveillance tools that can “listen” in on distant conversations. Combine that with facial recognition software and you have a great plot twist for a spy thriller.

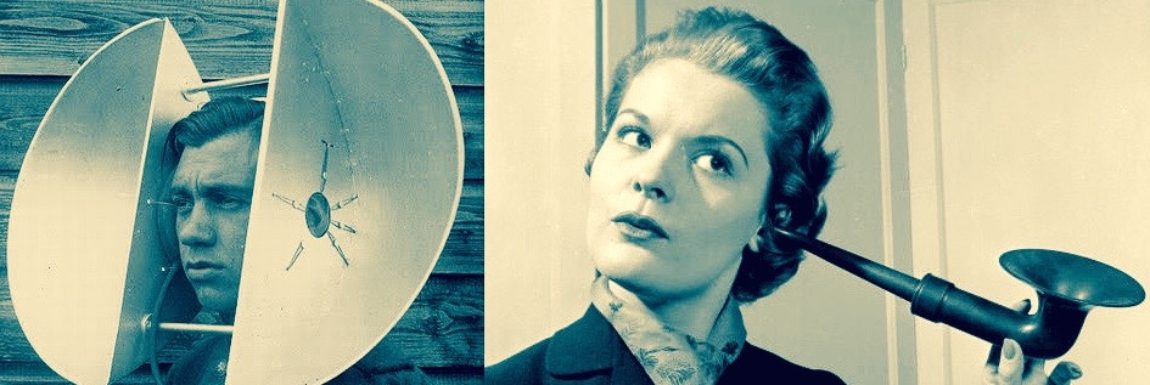

In the meantime, here are a few tips on lip reading. Actually, the preferred term these days is “speech reading”, because it involves reading not just lips but also facial expressions and gestures.

If you have hearing loss, you are already something of a speech reader, since your brain is constantly searching for clues about what is being said. You may notice how much easier it is to understand someone who is facing you directly in a well-lit space.

So to a large extent it’s intuitive, but it’s also a skill that can be improved. For online training, try Lipreading.org.